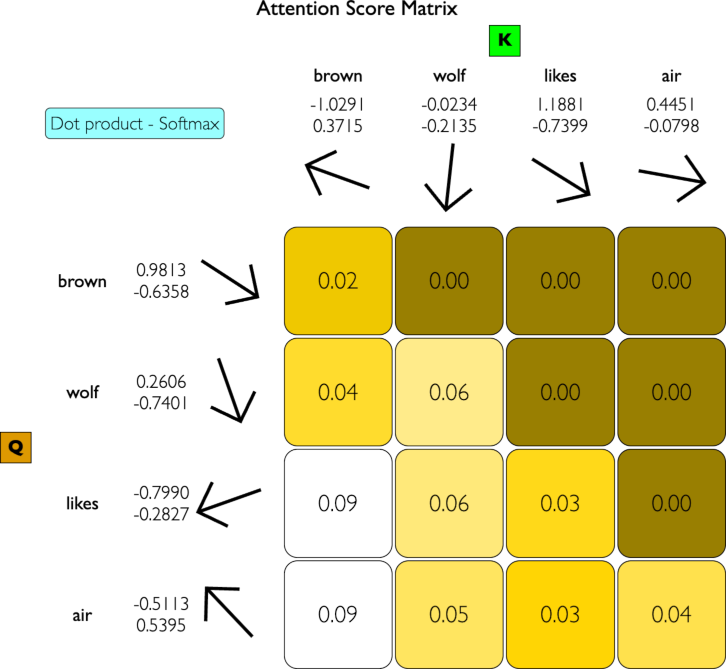

The rows show the words for which contextual reference is being sought. The columns show the words that are candidates for that reference. If two vectors point in nearly the same direction, which is expressed by a large dot product, then the row word is strongly related to the corresponding column word in the sequence.
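As a small illustration of the dot product as a similarity measure, the following sketch compares one vector with a parallel and a perpendicular one. The vectors and the names `dotProduct`, `query`, `parallelKey` and `orthogonalKey` are made up for this example and do not come from the implementation.

```javascript
// Dot product of two equally long vectors.
const dotProduct = (a, b) => a.reduce((sum, v, i) => sum + v * b[i], 0);

// Hypothetical 2-dimensional vectors.
const query = [1, 2];
const parallelKey = [2, 4]; // points in the same direction as query
const orthogonalKey = [-2, 1]; // perpendicular to query

console.log(dotProduct(query, parallelKey)); // 10
console.log(dotProduct(query, orthogonalKey)); // 0
```

The parallel pair produces the large score, the perpendicular pair produces zero.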

In language generation tasks, the model predicts the next word based on the previous words. By masking out future words, the model is forced to only consider the context of the words it has already seen, ensuring a causal dependency. This way, the prediction of each word depends only on the preceding words and not on the words that come after.

To summarize, masking out scores for words that come after the current word in self-attention ensures that the model generates text in a logical and sequential manner, maintaining causal dependencies and preventing information leakage.
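The masking step can be sketched in plain JavaScript as follows. This is a minimal illustration under assumptions, not the code of the implementation described here: the function name `causalMaskedSoftmax` and the score values are hypothetical, and the row-wise softmax is the usual way of turning masked scores into weights.

```javascript
// Apply a causal mask to a square matrix of raw attention scores, then
// normalize each row with softmax. Row i is the current word, column j is
// a candidate context word.
function causalMaskedSoftmax(scores) {
  return scores.map((row, i) => {
    // Positions j > i lie in the future, so their scores become -Infinity.
    const masked = row.map((s, j) => (j <= i ? s : -Infinity));
    // Subtract the row maximum for numerical stability.
    const max = Math.max(...masked);
    const exps = masked.map((s) => Math.exp(s - max)); // exp(-Infinity) is 0
    const sum = exps.reduce((a, b) => a + b, 0);
    return exps.map((e) => e / sum);
  });
}

// Hypothetical raw scores for a three-word sequence.
const rawScores = [
  [1.0, 2.0, 3.0],
  [1.0, 1.0, 5.0],
  [0.5, 1.5, 2.5],
];

const weights = causalMaskedSoftmax(rawScores);
console.log(weights);
```

In the result, the first word attends only to itself, the second word only to the first two words, and every entry above the diagonal is exactly zero, so no information from later words can leak into an earlier position.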

Transformation of each token in the input sequence (X) into the (Q), (K) and (V) vectors using the learned weights (Wq), (Wk) and (Wv).

Compute the dot product of each query vector with each key vector. The result is an attention score (A) indicating the relevance between words.

To obtain the output embeddings (O), you perform a matrix multiplication of the attention score matrix (A) with the value matrix (V). This operation combines the value vectors using the attention scores as weights, resulting in the final context vectors, which are the output embeddings.

In essence, the matrix multiplication $O = AV$ is a compact way of expressing the summation $C_i = \sum_j A_{ij} V_j$, in which each context vector $C_i$ is the weighted sum of the value vectors $V_j$, with weights given by the attention scores $A_{ij}$. This process integrates the information from the entire input sequence, influenced by the attention mechanism, to form the final context vectors or output embeddings (O).
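The equivalence between the matrix multiplication and the weighted sum can be checked with a few lines of plain JavaScript. The helper names `matMul` and `contextVector` and all numbers are assumptions chosen for this sketch. The attention matrix is hypothetical and already normalized so that each row sums to 1.

```javascript
// Plain matrix multiplication: (n x m) times (m x d) gives (n x d).
function matMul(a, b) {
  return a.map((row) =>
    b[0].map((_, col) => row.reduce((sum, v, k) => sum + v * b[k][col], 0))
  );
}

// The same result for one row i, written as an explicit weighted sum:
// C_i = sum over j of A[i][j] * V[j].
function contextVector(A, V, i) {
  const c = new Array(V[0].length).fill(0);
  V.forEach((vj, j) => {
    vj.forEach((x, d) => {
      c[d] += A[i][j] * x;
    });
  });
  return c;
}

// Hypothetical attention weights and value vectors for three positions.
const A = [
  [1.0, 0.0, 0.0],
  [0.5, 0.5, 0.0],
  [0.25, 0.25, 0.5],
];
const V = [
  [2, 0],
  [0, 4],
  [8, 8],
];

const O = matMul(A, V);
console.log(O); // [[2, 0], [1, 2], [4.5, 5]]
console.log(contextVector(A, V, 2)); // [4.5, 5], the same as O[2]
```

Each row of `O` is one context vector: the value vectors of all positions, mixed in the proportions given by that row of `A`.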

This software was created by vibe coding with a large language model (LLM) chatbot and was then reworked in look and feel.

As a result, as of February 2025 neither Copilot, Gemini nor ChatGPT could create the LLM itself. They produced only a code framework. Many problems, such as backpropagation in the attention layer or the convolution that generates the MLP mapping for the sequence, had to be programmed by hand.

Nevertheless, the bots were a great help.

It should be noted that the final implementation was based on TensorFlow.js, the JavaScript version of TensorFlow, for which significantly less example code exists that the bots could have been trained on. The bots had to find solutions creatively, using analogies.

The following prompts were used on Copilot to create a Python example, llm5.py:

"create a python tensorflow model for an llm. Use 2 dimenstional embeddings. Train the model on a sample text corpus. implement a predict function that takes a text as input and outputs a probability distribution for the next token."

"run a softmax on the predictions and print them line by line"

"where is the q,k,v attention layer in the createmodel"

"Iterating over a symbolic KerasTensor is not supported."

"should the predict sequence not hold the whole senence"

"use a CustomMultiHeadAttention class instead"

"shapes used to initialize variables must be fully-defined (no `None` dimensions). Received: shape=(None, 128) for variable path='dense_4/kernel'"

"you need to change dense_layer = Dense(128, activation='relu')(flatten_layer) instead"

"dense_layer = Dense(128, activation='relu')(flatten_layer) throws shapes used to initialize variables must be fully-defined"

"graph execution error Only one input size may be -1, not both 0 and 1 in model.fit"

"only one input size may be -1, not both 0 and 1 [[{{node functional_1/flatten_1/Reshape}}]] [Op:__inference_multi_step_on_iterator_2309] File "/Users/ichapple/Documents/Python/llm5.py", line 90, in model.fit(X, y, epochs=100, verbose=1) tensorflow.python.framework.errors_impl.InvalidArgumentError: Graph execution error: Detected at node functional_1/flatten_1/Reshape"

"CustomMultiHeadAttention returns shape with null"

"CustomMultiHeadAttention has _shape = (None, None, 8)"

The following prompts were used on Gemini to translate the Python code to JavaScript and to solve several problems:

"create a single webpage translate llm5.py to javascript and insert it to the webpage"

"llm5.py has its own training methods there is no need to specify weights and bias. translate the python one to one to javascript so it will work exactly the same"

"it throws: Class being registered does not have the static className property defined."

"generic_utils.js:243 Uncaught n: Unknown initializer: glorot_uniform. This may be due to one of the following reasons: The initializer is defined in Python, in which case it needs to be ported to TensorFlow.js or your JavaScript code. The custom initializer is defined in JavaScript, but is not registered properly with tf.serialization.registerClass()."

"topology.js:143 Uncaught TypeError: Cannot read properties of null (reading 'length') in model.add(new CustomMultiHeadAttention({key_dim: embedding_dim, num_heads: num_heads, name: 'mha', kernel_initializer: 'glorotNormal', bias_initializer: 'zeros'})); // tf.customLayer"

"topology.js:773 Uncaught (in promise) TypeError: Cannot read properties of undefined (reading 'rank')"

"util_base.js:153 Uncaught (in promise) Error: Error in matMul: inner shapes (8) and (2) of Tensors with shapes 8,3,8,8 and 8,3,2,8 and transposeA=false and transposeB=false"

"tensor_util_env.js:92 Uncaught (in promise) Error: Argument 'x' passed to 'floor' must be float32 tensor, but got int32 tensor"

"display a html table with the trained vocabulary use one row for each token, display the index, name and the embeddings of each token."

"for each head display html tables for the q,v,k matrices. Also display a html table with the attention scores"

"tensor.js:461 Uncaught (in promise) Error: Tensor is disposed.
at e.value (tensor.js:461:13)
at r5.slice (slice.js:32:8)
at displayAttentionVisualizations (a4.html:408:80)
at async predict_next_token (a4.html:309:14)"

"add position embeddings to the code and display them as a html table"

"you did not add positionEmbeddingLayer to the model so it is not part of the training process"

"but you apply the position_tensor only in the predict_next_token method but it is not used in the train method"

"instead of manually adding the position embeddings in the prodict and train method it would be better to make it part of the model. Can you do that"

"const positionTensor = tf.tensor2d([positions], [batchSize, seqLength], 'int32'); throws Uncaught (in promise) Error: Based on the provided shape, [8,3], the tensor should have 24 values but has 3"

"why are you doing this: const predicted_prob = model.predict(embeddingsAfterTensor).dataSync(); instead of const predicted_prob = model.predict(input_tensor).dataSync();"

"But the positional embeddings are already added in the model with the custom AddPositionEmbedding class"

"it is still redundant because also the norm layer is part of the model so model.predict(input_tensor).dataSync() should be enough"

"for the positions could you implement a sinus / cosines curve"

"positionEmbeddingsTensor = addPosLayer.positionEmbeddingLayer.getWeights()[0]; does not work"

"explaint the CustomMultiHeadAttention class with respect to self attention"

"If the sequence_len = 3 then for 2 heads there should be 2 attention_scores matrices with 3 by 3 values, giving a total of 18 scores in attention_scores right ?"

"so for a batch_size of 1 the shape would be [1,3,2,3] ?"

"but when I run the code it gives me [1,3,2,2] attention_scores"

"query_reshaped and key_reshaped both have shape 1,3,2,2 is this correct ?"

"but this.attention_scores = tf.matMul(this.query_reshaped, this.key_reshaped, true); computes the score matrix which we agree should have shape 1,3,2,3 but it turns out to be 1,3,2,2. Is there anything wrong with the matMul ?"

"The problem could be solved by changing the cols (batch_size, seq_len, num_heads, key_dim) to (batch_size, num_heads, seq_len, key_dim) then when transposing it would give [1, 2, 3, 2] matmul [1, 2, 2, 3] which would yield [1, 3, 2, 3] is that right ?"

"implement a Top-k Sampling sampling for a given array of softmax values jusing javascript only"

At this point the code was not yet executable. The lengthy troubleshooting and the implementation of missing features began, with the support of Gemini, Copilot and ChatGPT.

Embedding Vectors: Each word in the input sequence is converted into an embedding vector, which is a dense representation of the word in a high-dimensional space.

These embedding vectors are then transformed into query vectors (Q) and key vectors (K) through learned weight matrices.

This transformation allows the self-attention mechanism to compare queries and keys and calculate attention scores, determining how much focus each word should have in relation to the others in the input sequence.
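The path from words to attention scores can be sketched in plain JavaScript. Everything in this sketch is an assumption made for illustration: the three-word vocabulary, the 2-dimensional embedding table, the weight matrices `Wq` and `Wk` (which would be learned during training in a real model) and the helper names. The scaling by the square root of the key dimension that is common in practice is left out to keep the numbers easy to follow.

```javascript
// Hypothetical vocabulary and 2-dimensional embedding table (one row per token).
const vocab = { the: 0, cat: 1, sat: 2 };
const embeddingTable = [
  [1, 0],
  [0, 1],
  [1, 1],
];

// Hypothetical projection weights. In a trained model these are learned.
const Wq = [
  [1, 0],
  [0, 1],
];
const Wk = [
  [0, 1],
  [1, 0],
];

// Multiply a row vector by a matrix.
const project = (x, W) =>
  W[0].map((_, col) => x.reduce((sum, v, k) => sum + v * W[k][col], 0));

// Dot product of two equally long vectors.
const dot = (a, b) => a.reduce((sum, v, k) => sum + v * b[k], 0);

function attentionScores(words) {
  const X = words.map((w) => embeddingTable[vocab[w]]); // embedding lookup
  const Q = X.map((x) => project(x, Wq)); // query vectors
  const K = X.map((x) => project(x, Wk)); // key vectors
  // scores[i][j] is the dot product of query i with key j.
  return Q.map((q) => K.map((k) => dot(q, k)));
}

const scores = attentionScores(["the", "cat", "sat"]);
console.log(scores); // [[0, 1, 1], [1, 0, 1], [1, 1, 2]]
```

Row i of `scores` tells how strongly word i relates to each word in the sequence. These raw scores are what a softmax would then turn into attention weights.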
